Download C Program For Convolutional Code

Convolutional Coding. Convolutional codes are a bit like the block codes discussed in the previous lecture in that they involve the transmission of parity bits.

C/C++: Convolution Source Code. In mathematics and, in particular, functional analysis, convolution is a mathematical operation on two functions f and g, producing a third function. Unsupervised Feature Learning and Deep Learning Tutorial. In this exercise you will implement a convolutional neural network for digit classification.

Tutorial on Convolutional Coding with Viterbi Decoding: Simulation Source Code Examples

The simulation source code comprises a test driver routine and several functions, which will be described below. This code simulates a link through an AWGN channel from data source to Viterbi decoder output. The test driver first dynamically allocates several arrays to store the source data, the convolutionally encoded source data, the output of the AWGN channel, and the data output by the Viterbi decoder.

It calls the data generator, convolutional encoder, channel simulation, and Viterbi decoder functions in turn. It then compares the source data output by the data generator to the data output by the Viterbi decoder and counts the number of errors. Once 100 errors (sufficient for ±20% measurement error with 95% confidence) are accumulated, the test driver displays the BER for the given $E_s/N_0$. The test parameters are controlled by definitions in the source code.

The test driver includes a compile-time option to also measure the BER for an uncoded channel, i.e., a channel without forward error correction. I used this option to validate my Gaussian noise generator, by comparing the simulated uncoded BER to the theoretical uncoded BER given by $P_b = \tfrac{1}{2}\,\mathrm{erfc}\left(\sqrt{E_b/N_0}\right)$, where $E_b/N_0$ is expressed as a ratio, not in dB. I am happy to say that the results agree quite closely. When running the simulations, it is important to remember the relationship between $E_s/N_0$ and $E_b/N_0$. As stated earlier, for the uncoded channel, $E_s/N_0 = E_b/N_0$, since there is one channel symbol per bit. However, for the coded channel, in dB, $E_s/N_0 = E_b/N_0 + 10\log_{10}(k/n)$.

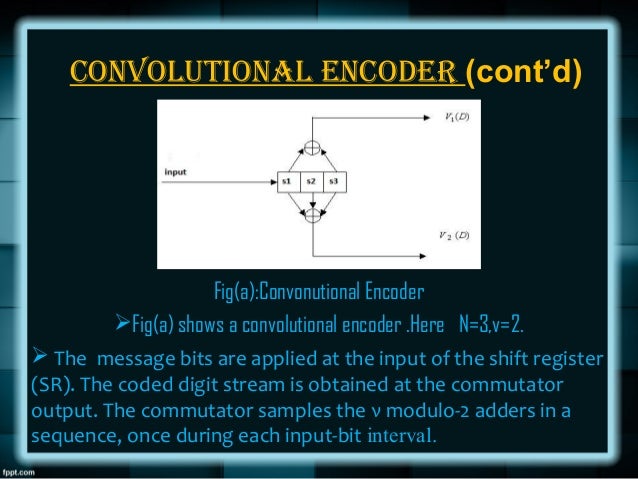

For example, for rate 1/2 coding, $E_s/N_0 = E_b/N_0 + 10\log_{10}(1/2) = E_b/N_0 - 3.01$ dB. For rate 1/8 coding, $E_s/N_0 = E_b/N_0 + 10\log_{10}(1/8) = E_b/N_0 - 9.03$ dB.

The data generator function simulates the data source. It accepts as arguments a pointer to an input array and the number of bits to generate, and fills the array with randomly chosen zeroes and ones.

The convolutional encoder function accepts as arguments the pointers to the input and output arrays and the number of bits in the input array. It then performs the specified convolutional encoding and fills the output array with one/zero channel symbols.
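A minimal sketch of a data generator and a convolutional encoder along these lines is shown below. The code parameters are assumptions chosen for illustration: constraint length K = 3, rate 1/2, and generator polynomials 7 and 5 (octal). The names `gen_bits` and `conv_encode` are hypothetical, and unlike a complete encoder this sketch does not append flushing zeroes after the data.

```c
#include <stdlib.h>

#define K      3                     /* constraint length (assumed)     */
#define N_OUT  2                     /* symbols per input bit: rate 1/2 */

static const unsigned G[N_OUT] = {07, 05};   /* assumed generators, octal */

/* Parity (XOR of all bits) of x. */
static int parity(unsigned x)
{
    int p = 0;
    while (x) {
        p ^= (int)(x & 1u);
        x >>= 1;
    }
    return p;
}

/* Fill bits[0..nbits-1] with pseudo-random zeroes and ones. */
static void gen_bits(int *bits, long nbits)
{
    for (long i = 0; i < nbits; i++)
        bits[i] = rand() > RAND_MAX / 2;
}

/* Encode nbits input bits into N_OUT * nbits one/zero channel symbols.
 * The shift register starts at zero; the newest bit sits in the LSB. */
static void conv_encode(const int *in, int *out, long nbits)
{
    unsigned sr = 0;
    for (long i = 0; i < nbits; i++) {
        sr = ((sr << 1) | (unsigned)(in[i] & 1)) & ((1u << K) - 1u);
        for (int j = 0; j < N_OUT; j++)
            out[i * N_OUT + j] = parity(sr & G[j]);
    }
}
```

Under these assumed parameters, the input bits 1, 0, 1, 1 encode to the symbol pairs 11, 10, 00, 01.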

The convolutional code parameters are defined in the header file.

The channel simulation function accepts as arguments the desired $E_s/N_0$, the number of channel symbols in the input array, and pointers to the input and output arrays. It performs the binary (one and zero) to baseband signal level (±1) mapping on the convolutional encoder channel symbol outputs. It then adds Gaussian random variables to the mapped symbols, and fills the output array. The output data are floating point numbers.

The arguments to the Viterbi decoder function are the expected $E_s/N_0$, the number of channel symbols in the input array, and pointers to its input and output arrays.

First, the decoder function sets up its data structures, the arrays described in the algorithm description section. Then, it performs three-bit soft quantization on the floating point received channel symbols, using the expected $E_s/N_0$, producing integers. (Optionally, a fixed quantizer designed for a 4 dB $E_s/N_0$ can be chosen.) This completes the preliminary processing.

The next step is to start decoding the soft-decision channel symbols. The decoder builds up a trellis of depth $K \times 5$, and then traces back to the beginning of the trellis and outputs one bit. The decoder then shifts the trellis left one time instant, discarding the oldest data, following which it computes the accumulated error metrics for the next time instant, traces back, and outputs a bit.
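The trellis build-up and traceback can be sketched compactly as follows. This is an illustration under stated assumptions, not the original decoder: it assumes K = 3, rate 1/2, generators 7 and 5 (octal), and input symbols that have already been quantized to three bits (0 = confident zero, 7 = confident one). It uses a circular buffer in place of physically shifting the trellis, starts each traceback from the state with the smallest accumulated metric, and does not normalize metrics, so it suits short runs only. All names are hypothetical.

```c
#include <limits.h>
#include <string.h>

#define K         3                    /* constraint length (assumed)    */
#define NSTATES   (1 << (K - 1))
#define DEPTH     (K * 5)              /* traceback depth, as in the text */
#define UNREACHED (LONG_MAX / 2)

static const unsigned G[2] = {07, 05}; /* assumed generators, octal */

static int parity(unsigned x)
{
    int p = 0;
    while (x) { p ^= (int)(x & 1u); x >>= 1; }
    return p;
}

/* Index of the state with the smallest accumulated error metric. */
static int best_state(const long *metric)
{
    int best = 0;
    for (int s = 1; s < NSTATES; s++)
        if (metric[s] < metric[best]) best = s;
    return best;
}

/* q: 2*nbits soft symbols in 0..7; out: nbits decoded bits. */
static void viterbi_decode(const int *q, int *out, long nbits)
{
    long metric[NSTATES], next[NSTATES];
    unsigned char prev[DEPTH][NSTATES];      /* circular survivor memory */

    memset(prev, 0, sizeof prev);
    for (int s = 0; s < NSTATES; s++) metric[s] = UNREACHED;
    metric[0] = 0;                           /* encoder starts in state 0 */

    for (long t = 0; t < nbits; t++) {
        for (int ns = 0; ns < NSTATES; ns++) {
            int b = ns & 1;                  /* input bit leading into ns */
            next[ns] = UNREACHED;
            for (int h = 0; h < 2; h++) {    /* the two predecessor states */
                int s = (ns >> 1) | (h << (K - 2));
                if (metric[s] >= UNREACHED) continue;
                unsigned sr = ((unsigned)s << 1) | (unsigned)b;
                long m = metric[s];
                for (int j = 0; j < 2; j++) { /* soft branch metric */
                    int sym = q[2 * t + j];
                    m += parity(sr & G[j]) ? 7 - sym : sym;
                }
                if (m < next[ns]) {
                    next[ns] = m;
                    prev[t % DEPTH][ns] = (unsigned char)s;
                }
            }
        }
        memcpy(metric, next, sizeof metric);

        if (t >= DEPTH - 1) {                /* window full: emit oldest bit */
            int s = best_state(metric);
            for (int k = 0; k < DEPTH - 1; k++)
                s = prev[(t - k) % DEPTH][s];
            out[t - DEPTH + 1] = s & 1;
        }
    }

    if (nbits > 0) {                         /* flush bits still in window */
        int s = best_state(metric);
        long first = nbits > DEPTH ? nbits - DEPTH + 1 : 0;
        for (long t = nbits - 1; t >= first; t--) {
            out[t] = s & 1;
            s = prev[t % DEPTH][s];
        }
    }
}
```

Here a state is the K - 1 most recent input bits with the newest in the least significant position, so the decoded bit at any time instant is simply the low bit of the survivor state at that instant.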